The most advanced virtual set and AR solution

InfinitySet is designed to cope with any requirement, from advanced tracked virtual sets to inexpensive trackless environments. With practically infinite cameras and the industry-first TrackFree™ technology, which allows combining tracked and trackless environments for further flexibility, InfinitySet is the most advanced solution available today for virtual set production.

Beyond Virtual Production

InfinitySet performs at its best within complex broadcast environments. It works as a hub system for a number of technologies and hardware required for virtual set and augmented reality operation, because virtual set production is much more than just placing talents over a background.

These range from tracking and control devices and protocols to mixers, chroma keyers, NRCS workflows for journalists and many more elements that make up the broadcast virtual production environment.

InfinitySet allows for multiple input sources, no matter if they are real cameras or video feeds, and the resulting scenes can be as complex as required, with multiple elements interacting in the virtual world, and even matching the depth-of-field of the presenter with that of the scene. Such flexibility allows InfinitySet to perform at its best in other environments such as drama or film production, for previz or finishing.

Advanced, data-driven motion graphics integration

InfinitySet not only seamlessly integrates Aston projects, but is also fully compatible with Aston, including the project’s StormLogic interaction logic, and features a complete 2D/3D graphics creation toolset, even with automatic external data input.

InfinitySet 4 goes one step beyond, being able to send Aston projects directly to UE as textures, including its StormLogic, or alternatively using an Aston as a layer over UE.

Photorealistic, accurate Augmented Reality

As most Augmented Reality content requires advanced graphics features to guarantee the accuracy and realism of the result, InfinitySet now takes advantage of the Aston graphics creation and editing toolset to achieve this.

Also, set space restrictions are no longer a problem because, regardless of whether the camera is fixed, manned, tracked or robotic, InfinitySet can virtually detach the camera feed while maintaining the correct position and perspective of the talent within the virtual scene.

New on InfinitySet 4

InfinitySet 4 is a major update that includes a number of unique features designed to enhance content creation and output. Apart from a tighter integration and control of Unreal Engine (UE), Suite 4 provides advanced features like simultaneous renders, multiple outputs and additional hardware support.

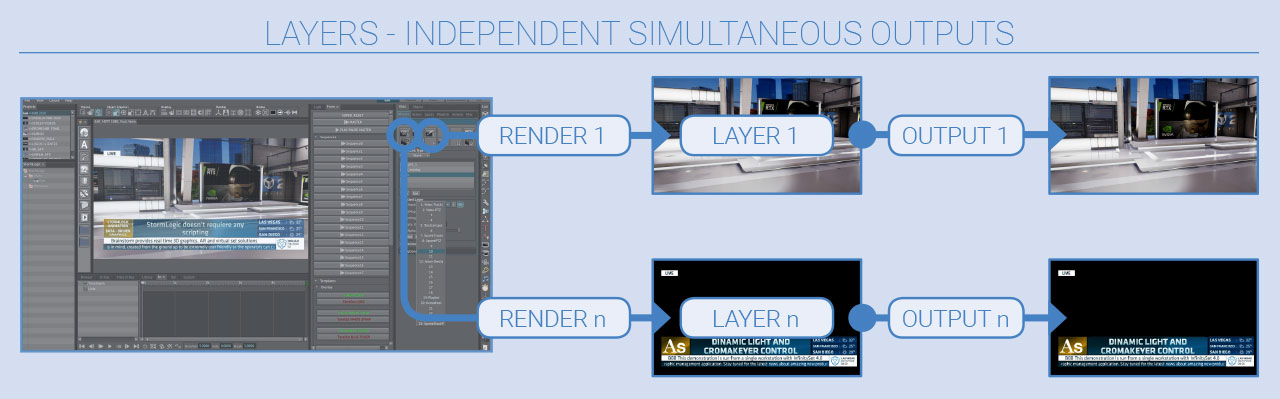

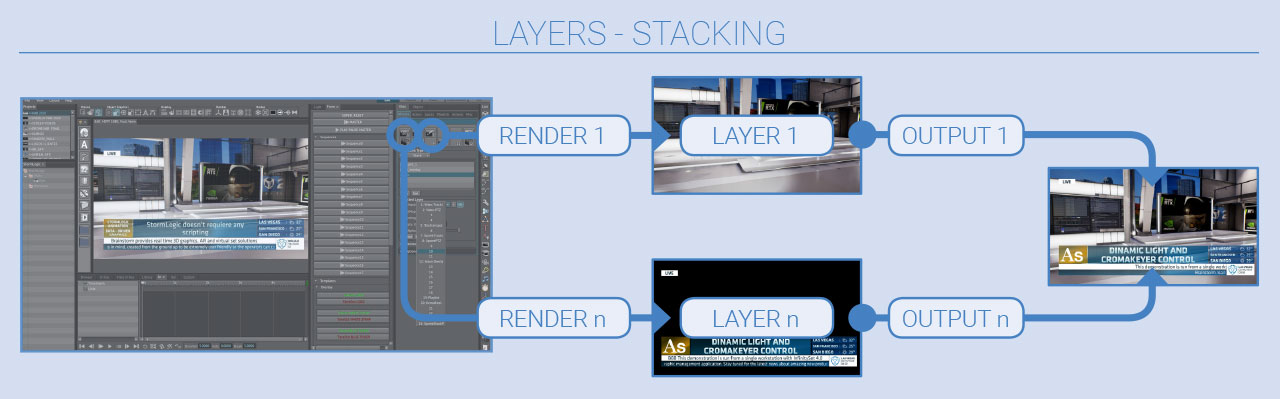

Layers

Layers allow for creating multiple simultaneous renders using a single workstation. Using Layers, InfinitySet can now deliver several video outputs from a single instance or combine several crosspoints in a single video output (stack).

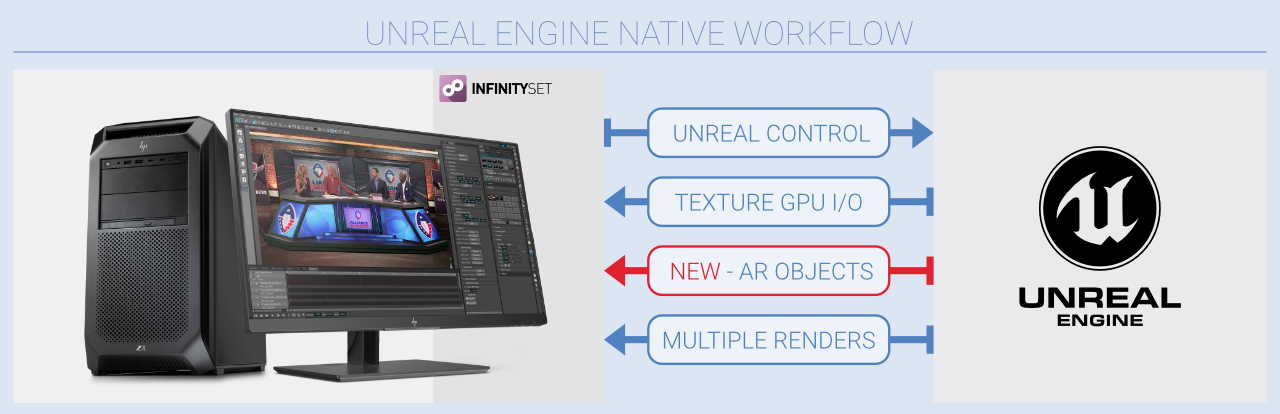

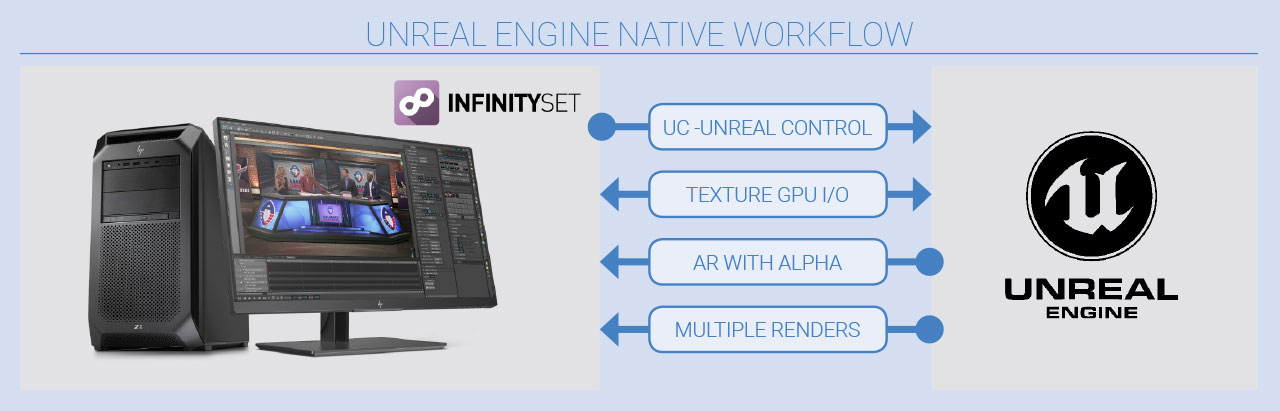

InfinitySet goes Unreal-native

InfinitySet features an Unreal-native behavior, so it can achieve anything Unreal Engine provides. The added value of this configuration is the multitude of benefits of including more than 25 years of Brainstorm’s experience in broadcast and film graphics, virtual set and augmented reality production, including data management, playout workflows, virtual camera detach, multiple simultaneous renders and much more.

With InfinitySet 4, Unreal projects can interchangeably be background or foreground within InfinitySet, with 100% pixel accuracy guaranteed. This opens the door to creating AR with Unreal Engine with total ease.

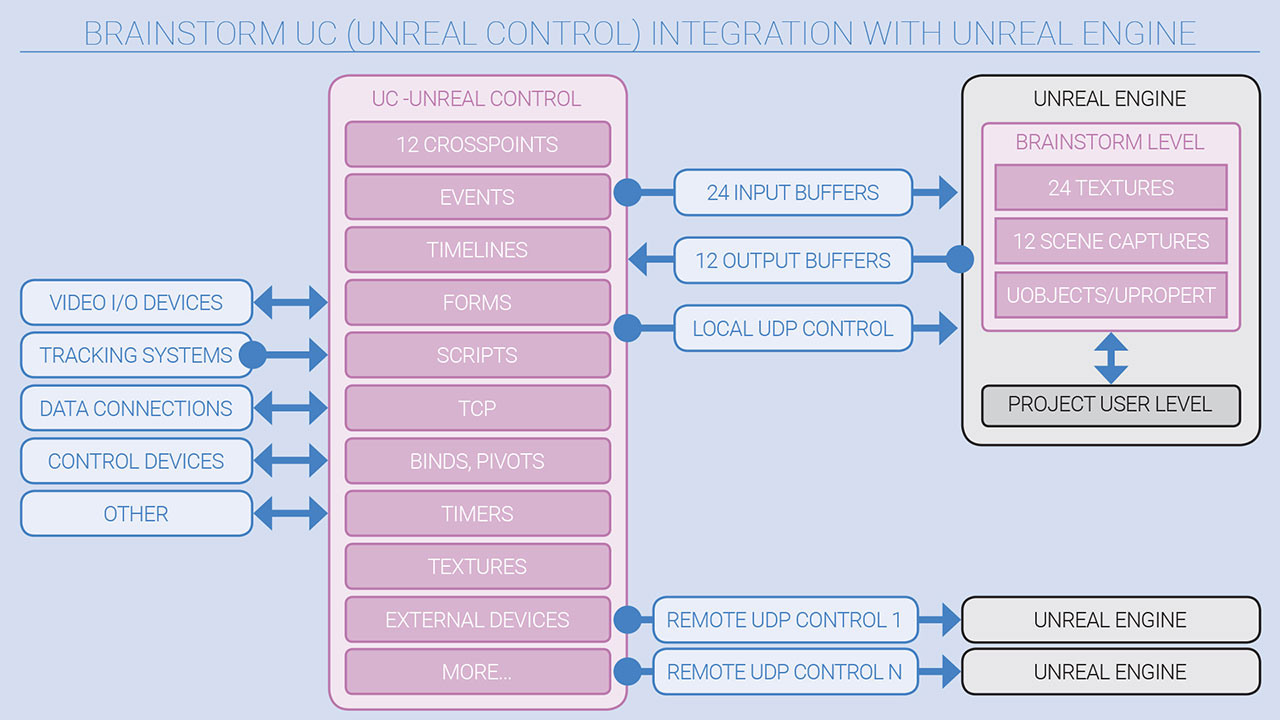

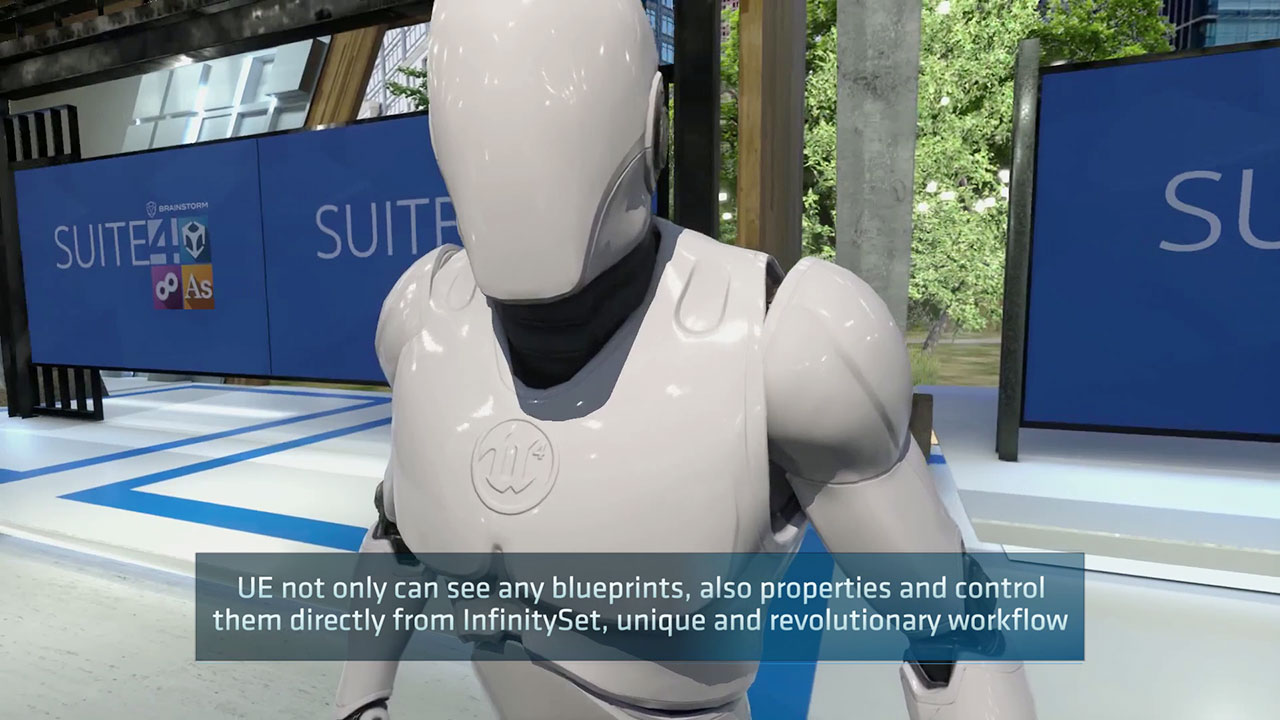

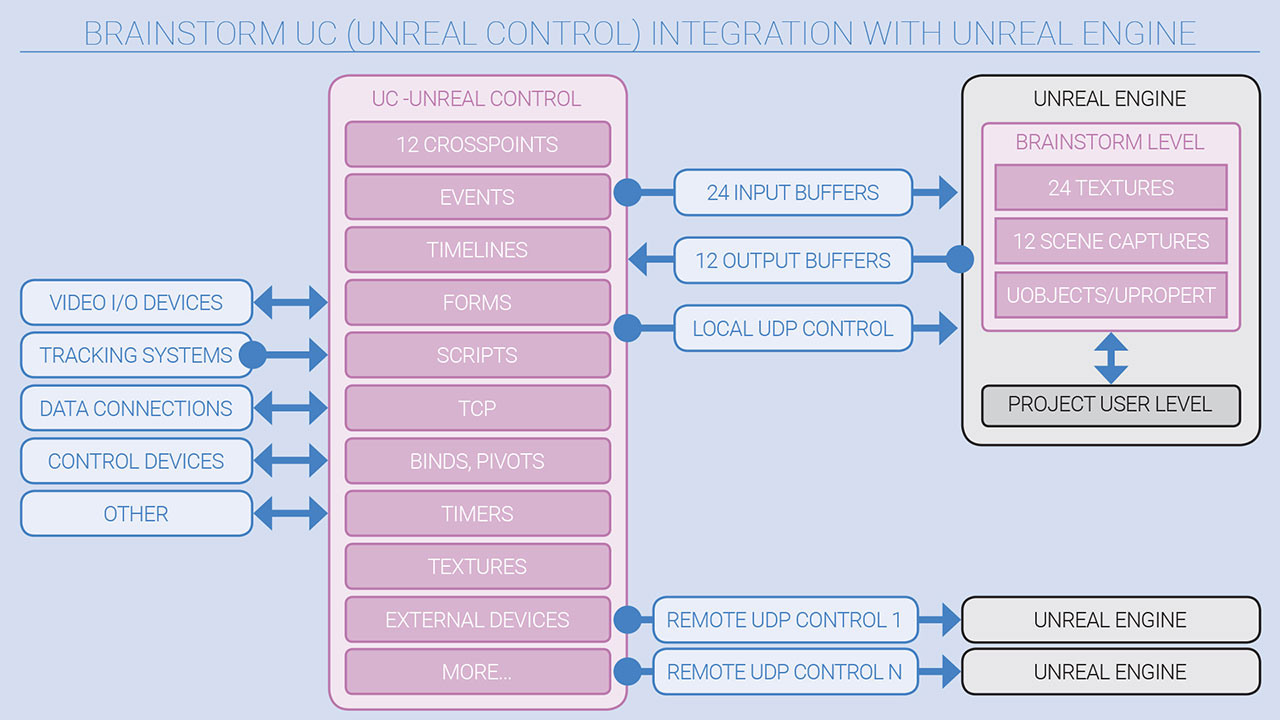

Unreal Control

InfinitySet also adds a new control layer, a dedicated, user-friendly control interface. The Unreal Engine Control can see any blueprints, objects and properties in the UE project and control them directly from InfinitySet, which results in a new, unique and revolutionary workflow that does not require preparing blueprints in advance for every action in UE.

Unreal Engine Control works in both directions, so it can also transfer any input to UE for use as a texture within a UE object, such as live video (including chroma keyed talents), movies and playlists, still images, Aston projects with StormLogic or regular textures.

Camera detaching in Unreal Engine

Unreal Engine renders are now compatible with the acclaimed Camera Detaching feature of InfinitySet, so that Unreal can take advantage of the TrackFree™ technology. Users can create a virtual camera from a live tracked camera view within Unreal Engine.

Templates and data-driven graphics within Unreal Engine

The Combined Render Engine has been the best and most transparent solution to include statistics, charts, text and other broadcast graphics within UE generated content. InfinitySet 4 goes one step beyond, being able to send Aston projects directly to UE as textures, including its StormLogic, or alternatively using an Aston as a layer over UE.

Enhanced broadcast hardware compatibility for Unreal Engine

Brainstorm enjoys more than 25 years of experience in broadcast and film, so InfinitySet is compatible with most broadcast hardware and workflows, and can control and be controlled by external hardware via GPI and other industry-standard protocols. Brainstorm products are, off the shelf, compatible with most vendors’ video cards, GPUs or tracking devices and protocols.

Because of Brainstorm’s hardware compatibility, broadcasters can seamlessly include UE-based virtual and AR content within their standard workflows. InfinitySet provides additional support for video hardware devices, including support and driver updates for Brainstorm-supported video boards and for virtually any tracking device, not to mention other devices like mixers, cameras, automation, capture devices and many more.

TrackFree™

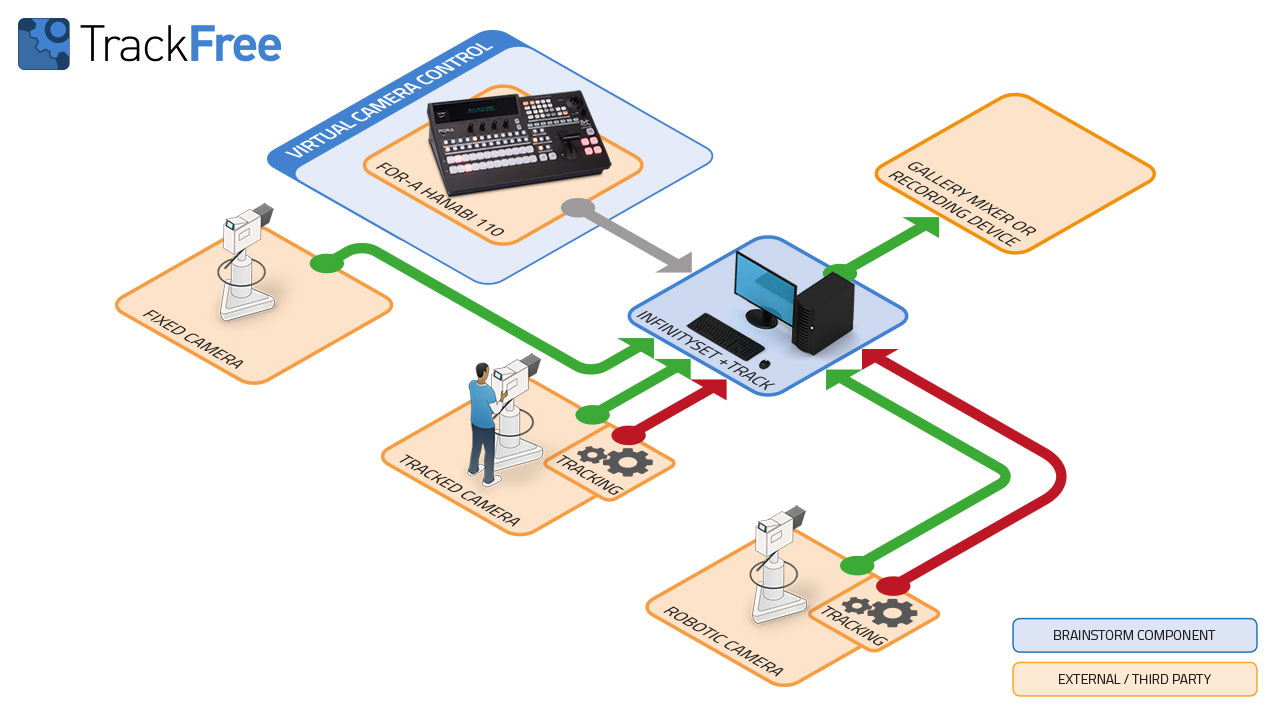

TrackFree is a patented camera-tracking independent technology that provides just what its name claims: the total freedom for operators to use any tracking system, trackless or fixed cameras, or a combination of these at the same time.

Combined Chroma Keying

TrackFree™ allows users to choose their preferred keying method: InfinitySet’s internal chroma keyer, external chroma key hardware or a combination of both, even within the same production.

Advanced TrackFree™ features

- TeleTransporter

- 3D Presenter

- Virtual shadows and selective defocus

- Virtual Camera detaching

- MagicWindows: VideoGate & VideoCAVE

- Dynamic Light Control

Advanced Internal Chroma Keyer with Differential CK and UE Keyer

InfinitySet features an advanced internal, resolution-independent Chroma Keyer, supporting controls for main key, spill correction, detail recovery and color correction in different color spaces.

InfinitySet automatically creates a secondary key for feet tracking, independent from the main one. This key permits the calculation of the talent’s feet position for the FreeWalking feature and for creating virtual shadows and reflections.

Brainstorm has fully redesigned the keyer’s algorithm, with improved precision in all color spaces, while adding new features that make the keying process easier for non-expert users as well. InfinitySet and eStudio now include a number of presets that create an automatic, single-click key as a starting point, which can be further refined by tweaking any values as required.

Brainstorm has introduced a new feature that allows for creating a differential chroma key (called RefImage). The differential chroma key is based on an image of the chroma screen without the talent. This reference creates a keying map that easily discriminates the talent once placed in the chroma set, by looking at the difference between the “clean” chroma and the footage with the talent. By using RefImage, the shadows in the chroma set are more easily extracted from the footage.
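The general idea behind a difference key can be illustrated with a few lines of Python. This is only a minimal sketch of the generic technique, not Brainstorm’s RefImage algorithm: the function name `difference_matte` and the `threshold` and `softness` parameters are assumptions made up for this illustration, and pixels are plain 8-bit RGB tuples.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # 8-bit RGB


def difference_matte(
    clean_plate: Sequence[Pixel],
    footage: Sequence[Pixel],
    threshold: float = 30.0,  # hypothetical: distances below this count as background
    softness: float = 60.0,   # hypothetical: width of the soft transition
) -> List[float]:
    """Return one alpha value per pixel: 0.0 = clean chroma, 1.0 = talent."""
    if len(clean_plate) != len(footage):
        raise ValueError("clean plate and footage must have the same size")
    matte = []
    for ref, cur in zip(clean_plate, footage):
        # Euclidean distance in RGB between the empty set and the live frame.
        dist = sum((a - b) ** 2 for a, b in zip(ref, cur)) ** 0.5
        alpha = (dist - threshold) / softness
        matte.append(min(1.0, max(0.0, alpha)))
    return matte


clean = [(0, 200, 0), (0, 200, 0), (0, 200, 0)]
frame = [(0, 200, 0), (0, 150, 0), (200, 150, 120)]  # screen, shadow, talent
print(difference_matte(clean, frame))
```

In this toy frame the untouched screen pixel gets an alpha of 0, the talent pixel gets 1, and the darkened (shadow) pixel lands in between, which is why a reference image makes shadows easier to isolate.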

On top of all the above, InfinitySet now includes a new keying mode that utilizes the Unreal Engine keyer. This opens the door for users that feel comfortable with the UE keyer to take advantage of this technology inside the Brainstorm environment.

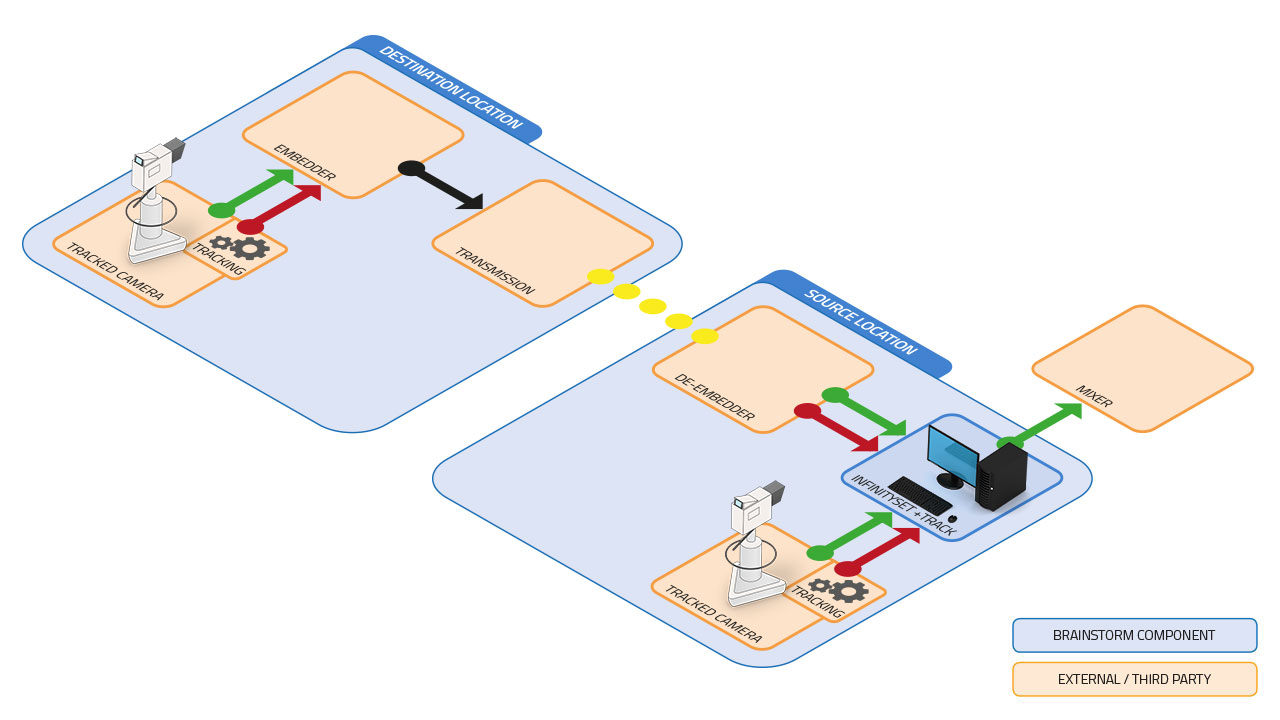

TeleTransporter

TeleTransporter seamlessly combines virtual sets with live or pre-recorded video feeds of the presenters and 3D objects from remote locations, all moving accordingly with precise perspective matching. TeleTransporter allows a remote talent to enter any scenario at any time, while seamlessly mixing real and virtual elements.

Tracking data coming from real cameras or third-party tracking software can be included as metadata in live feeds or pre-recorded videos, and immediately be transferred and applied to the virtual camera’s parameters.

3D Presenter

3D Presenter converts the live video feed of the talent into a true 3D representation. The presenter becomes an actual 3D object embedded within the virtual set, casting real shadows and reflections over virtual and real objects and interacting with volumetric lights.

HandsTracking

HandsTracking allows presenters to trigger animations, graphics or data with a simple movement of the hands and without the need for any additional costly and complex tracking devices. InfinitySet features an optimized usability and also supports StormLogic from Aston graphics.

FreeWalking

FreeWalking enables talents to move freely around the green screen theatre matching their movements within the 3D space. Presenters are allowed to move forward, backwards and sideways with precise perspective matching even though the real camera is in a fixed position.

Real Set Virtualization

Thanks to the TrackFree™ technology and its TeleTransporter feature, InfinitySet can interchangeably use real, live or pre-recorded footage as the background set for the chroma keyed talent. This enhances the corporate image of a large broadcaster, as a single real set can be reused as the background scenario for smaller stations in the network.

Virtual shadows and selective defocus

InfinitySet can create virtual shadows in addition to the real keyed shadows and apply them to virtual surfaces. Using the real camera’s parameters, InfinitySet is able to create selective defocus and bokeh effects on the virtual scene, adding an even more realistic look to the final rendered composition.
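How camera parameters relate to defocus can be sketched with the standard thin-lens circle-of-confusion formula. This is textbook optics offered as an illustration, not a description of how InfinitySet computes its defocus; the function name and the example lens values are assumptions.

```python
def circle_of_confusion_mm(
    focal_length_mm: float,
    f_number: float,
    focus_distance_mm: float,
    object_distance_mm: float,
) -> float:
    """Blur-spot diameter on the sensor for an object away from the focus plane.

    Thin-lens approximation: c = A * |S2 - S1| / S2 * f / (S1 - f),
    where A = f / N is the aperture diameter, S1 the focus distance
    and S2 the object distance.
    """
    if focus_distance_mm <= focal_length_mm:
        raise ValueError("focus distance must exceed the focal length")
    if object_distance_mm <= 0:
        raise ValueError("object distance must be positive")
    aperture_mm = focal_length_mm / f_number
    defocus = abs(object_distance_mm - focus_distance_mm) / object_distance_mm
    magnification = focal_length_mm / (focus_distance_mm - focal_length_mm)
    return aperture_mm * defocus * magnification


# Hypothetical shot: 50 mm lens at f/2.8, talent in focus at 3 m,
# virtual object placed 6 m from the camera.
print(circle_of_confusion_mm(50.0, 2.8, 3000.0, 6000.0))
```

An object on the focus plane yields a blur diameter of zero, and the blur grows as the virtual object moves away from the talent, which is the behaviour a selective defocus needs to reproduce.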

Virtual Camera detaching

Set space restrictions are no longer a problem. Regardless of whether the camera used is fixed, manned, tracked or robotic, InfinitySet can seamlessly detach the camera feed while maintaining the correct position and perspective of the talent within the virtual scene.

MagicWindows: VideoGate

VideoGate allows the presenter to be integrated not only in the virtual set but also inside additional content, meaning the presenter can travel between real worlds, virtual worlds or between real and virtual scenes.

VideoGate extends the virtual scenario beyond the virtual set and creates an infinite combination of worlds for the presenters to be in, allowing for better real-time content creation possibilities.

MagicWindows: VideoCAVE

The VideoCAVE feature is a Mixed Reality application which makes monitors on a real or virtual set behave as windows, with virtual elements seen from the perspective of the camera, as if the screens were windows to the outside world.

VideoCAVE also allows for including additional features such as a touch screen for interactivity, and can be combined with the VideoGate feature, with objects coming in and out of the screens.

Dynamic Light Control

InfinitySet can remotely control and adjust external light panels in real time via DMX, as well as external chroma keyer settings, allowing the lighting conditions of the real set to be changed to match those of the virtual set.
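To give an idea of what a DMX light level looks like as data, the sketch below builds the slot data of one DMX512 universe: a start code of 0 followed by 512 channel slots of 8 bits each. How InfinitySet actually sends DMX is not described here; the function name, the channel numbers and the normalized 0.0 to 1.0 intensities are assumptions for illustration only.

```python
from typing import Dict

DMX_CHANNELS = 512  # slots in one DMX512 universe


def build_dmx_frame(levels: Dict[int, float]) -> bytes:
    """Map normalized light intensities (0.0-1.0) to DMX slot values (0-255).

    `levels` maps 1-based DMX channel numbers to intensities.
    Index 0 of the result is the null start code used for dimmer data.
    """
    frame = bytearray(DMX_CHANNELS + 1)  # start code + 512 slots, all zero
    for channel, level in levels.items():
        if not 1 <= channel <= DMX_CHANNELS:
            raise ValueError(f"channel {channel} outside 1..{DMX_CHANNELS}")
        clamped = min(1.0, max(0.0, level))
        frame[channel] = int(clamped * 255 + 0.5)  # round to nearest
    return bytes(frame)


# Hypothetical patch: key light on channel 1 at full, fill light on channel 10 at 50%.
frame = build_dmx_frame({1: 1.0, 10: 0.5})
print(len(frame), frame[1], frame[10])
```

Matching the real set to the virtual one then comes down to recomputing these slot values whenever the virtual lighting changes.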

FORMS: Custom interface creation

FORMS allow for creating purpose-built interfaces, showing only the tools required for a given project. FORMS are effectively custom interfaces created for a project, which control whatever that project requires and allow the resulting graphics to be customized as needed.

This is especially useful in live production environments like entertainment shows or quizzes, where additional logic is required to display specific questions, select the correct answer and the subsequent actions, and much more.

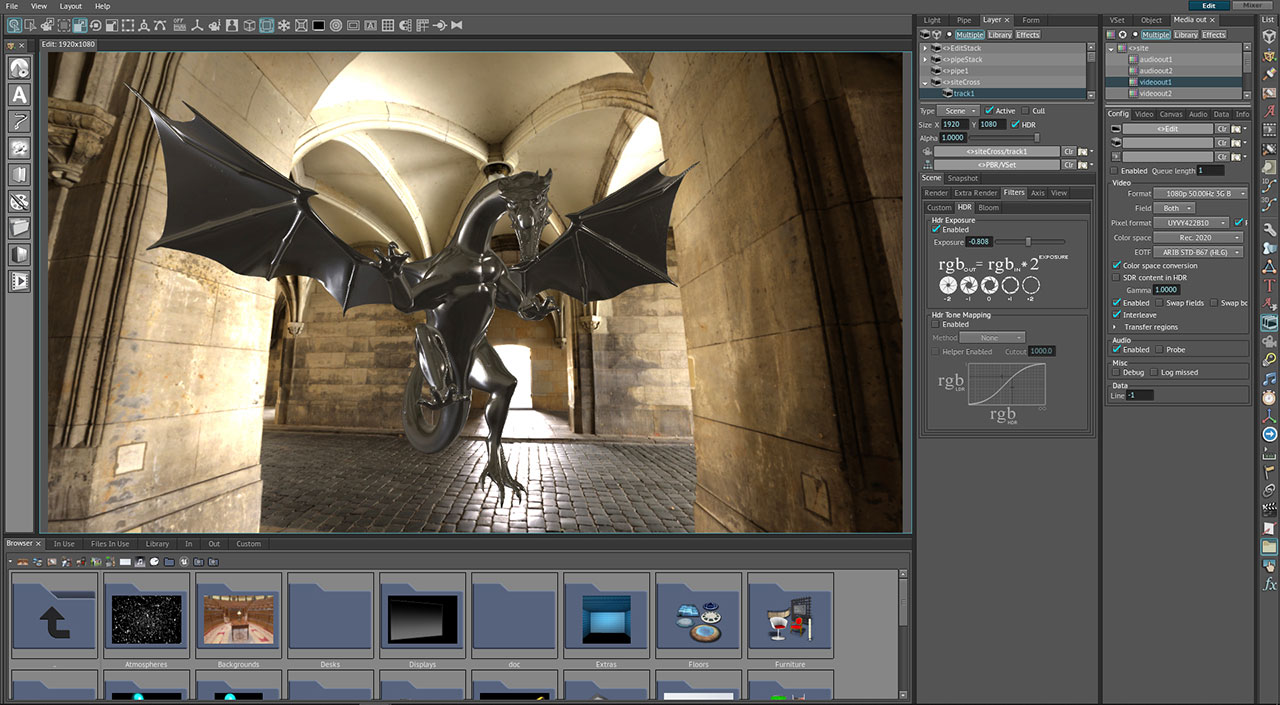

Advanced rendering

The integration between the real and virtual objects and environments is essential, so the next step in virtual set production and Augmented Reality applications is to increase the realism of the content. This involves high quality rendering and the perfect integration between the different elements of the scene to provide a sense of realism.

Along with advanced rendering features such as real-time ray tracing, PBR or HDR, Brainstorm fully supports gaming engines like Unreal Engine, providing photorealistic scenes in any resolution.

Real-time ray tracing

InfinitySet takes full advantage of the latest hardware developments found in NVIDIA RTX GPU technology. By using NVIDIA Quadro RTX GPUs, InfinitySet can deliver real-time ray tracing, which provides a much more accurate rendering, especially with complex light conditions.

PBR and HDR

InfinitySet fully supports PBR shaders as materials, which can also be imported from external shader editing software like Substance and other material editors.

HDR allows for rendering wide-gamma pictures. InfinitySet can render in 16-bit floating point per channel/component, with support for P2020 gamma correction output. This allows for post-rendering exposure control and extended-range filtering.
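Why a floating-point render makes exposure control possible after rendering can be shown with a small sketch. It uses Python’s `struct` half-precision format to mimic 16-bit float storage; the function names and pixel values are assumptions for illustration and do not reflect InfinitySet’s internal pipeline.

```python
import struct
from typing import Tuple


def to_half(value: float) -> float:
    """Round-trip a value through IEEE 754 16-bit float storage."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def apply_exposure(pixel: Tuple[float, float, float], stops: float) -> Tuple[float, ...]:
    """Scale a linear-light pixel by 2**stops, keeping 16-bit float precision."""
    gain = 2.0 ** stops
    return tuple(to_half(component * gain) for component in pixel)


# A highlight four times brighter than display white survives in float storage...
hdr_pixel = (to_half(4.0), to_half(1.0), to_half(0.25))
# ...so pulling exposure down two stops recovers detail instead of a clipped value.
print(apply_exposure(hdr_pixel, -2))
```

With 8-bit integer storage the 4.0 highlight would already have been clipped to white at render time, so lowering the exposure afterwards could only darken it to grey.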

Unreal Engine workflow

InfinitySet fully supports Unreal Engine, taking advantage of the rendering quality that the game engine provides. The added value of this configuration is the multitude of benefits of including more than 25 years of Brainstorm’s experience in broadcast and film graphics, virtual set and augmented reality production, including data management, playout workflows, virtual camera detach, multiple simultaneous renders and much more.

With InfinitySet, UE projects can interchangeably be background or foreground, with 100% pixel accuracy guaranteed. This opens the door to easily creating AR with Unreal Engine, in addition to virtual set production.

Unreal Control

InfinitySet also adds a new control layer, a dedicated, user-friendly control interface. The Unreal Control can see any blueprints, objects and properties in the UE project and control them directly from InfinitySet, which results in a new, unique and revolutionary workflow that does not require preparing blueprints in advance for every action in UE.

Unreal Control works in both directions, so in addition to the control of blueprints, objects and properties from InfinitySet’s interface, it can also transfer any input to UE to use it as a texture within a UE object.

Beyond game engines: Combined Render Engine

What Brainstorm offers goes far beyond what a game engine can do by itself. Broadcast graphics workflows have specific requirements, like database connections, statistics, tickers, social media or lower-thirds, a variety of hardware and software elements that are alien to the game engine framework but essential for broadcast operation.

Brainstorm’s approach to external render engine support is unique in the industry, as it provides alternatives for using different render engines so users can achieve anything they might require. Brainstorm’s initial approach to using game render engines combined its renowned eStudio render engine with the Unreal Engine in a single machine, and it is called Combined Render Engine.

In addition to the new developments and the improved UE compatibility and UE-native workflows, the Combined Render Engine approach allows users to select the alternative that fits best with their requirements or workflows.

InfinitySet’s TrackFree™ technology allows for using tracked cameras, fixed cameras or any combination of them to shoot the talent on the set, with either internal or external chroma keyers.

Virtual production

Visual effects are as old as movies, and as technology evolved, “analogue” visual effects, stop motion and shooting in studio over moving cycloramas or painted backgrounds were replaced by digital effects. However, taking so much of a movie to post-production requires time and money, and as the minutes to be handled in post increase, so does the budget. Therefore, the challenge is to reduce the post-production budget by bringing in more finished shots or by requiring less time in post.

Brainstorm technology has been used in film for real-time pre-visualization of green screen shots in lower resolution to ensure the synthetic elements match the real ones, simplifying post-production. Brainstorm pioneered this in movies such as A.I. (2001) and I, Robot (2004), and developed it further in later productions. This technology reduced post-production time by ensuring the shots were accurate, so post artists could dedicate their time to finishing the shot rather than fixing issues. Now, as broadcast and film making move to 4K and beyond, InfinitySet’s real-time technology allows for creating virtual scenes from green screen shooting that are good enough to match the quality of film and high-end drama production, further reducing post-production time and increasing flexibility when shooting.

Traditionally, production takes care of shooting (outdoors, in studio or in chroma sets), lighting and other tasks, while post-production involves editing, grading and VFX, which includes chroma keying, tracking, focus matching, computer VFX, compositing and much more, all significantly time-consuming.

Real-time post-production

Although chroma keying technology and virtual sets have been around for a long time, the latter have sometimes been criticized for the relative lack of realism compared to other non-real-time applications such as composition and VFX technologies.

InfinitySet’s ability to work as a preview hub allows for substantial savings in filming and post-production costs, ensuring the different shots are adjusted (chroma, camera movements, tracking, etc.) prior to entering post-production, and even directly delivering the final production master.

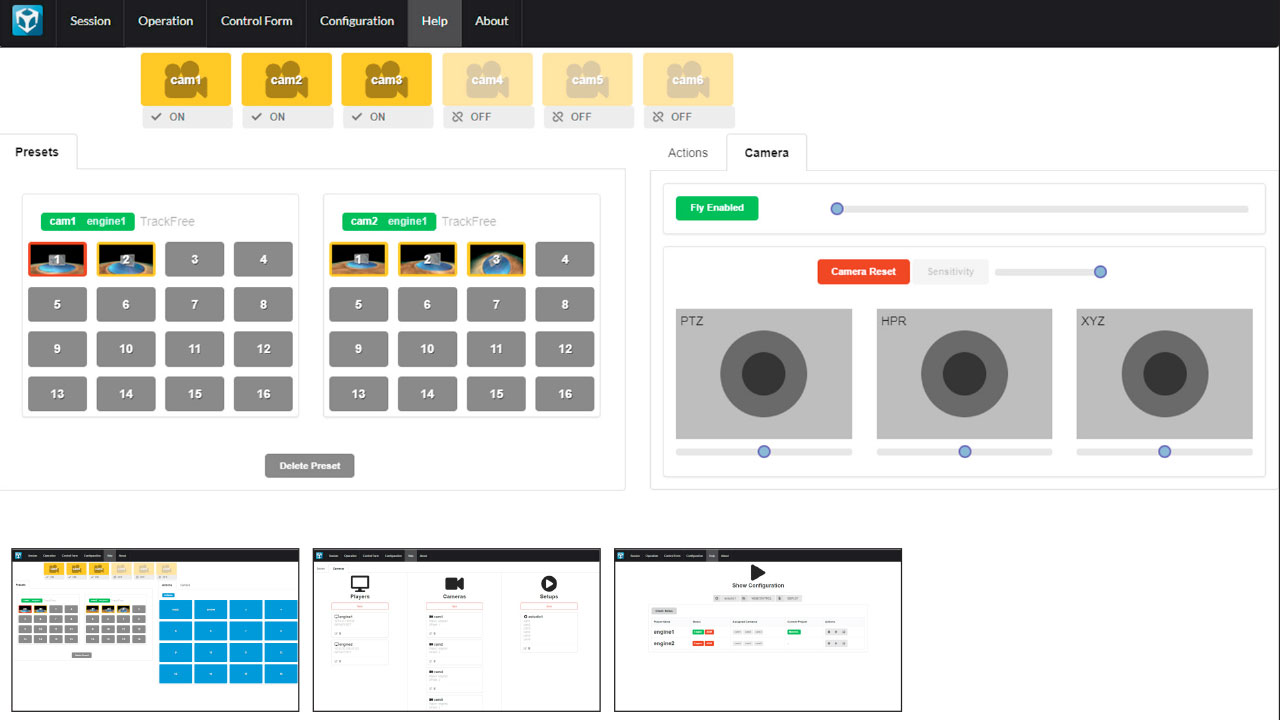

Web Control

Brainstorm’s Web Control is an independent, HTML5-based application designed to control the playout mode of InfinitySet.

As it has been developed with flexibility in mind, it can sit on the InfinitySet workstation or on a remote computer, separate from the workstations, allowing for the remote control of virtual sets, camera presets, actions, transitions or graphics directly from its GUI. The Web Control interface can be accessed using a standard web browser, and so provides an easy-to-use interface not only from desktop computers but also from tablets.

Web Control Main Features

- Replacement for an InfinitySet Player remote workstation, including the Remote control of most InfinitySet features:

- WebControl can trigger predefined Camera Presets.

- Camera parameters and positions can be controlled directly from the Web Control interface.

- Direct adjustment of Chroma key settings from the WebControl interface.

- Management and control of InfinitySet’s playlists directly from the WebControl. Additional media can also be added to existing playlists from the resources folder.

- Trigger Actions when present, so users can control the pre-defined Actions buttons.

- Control of several InfinitySet workstations via Ethernet.

- Can sit on the same workstation as InfinitySet or on a separate computer, and connect to one or several InfinitySet workstations, read their projects’ configuration and load them for control, in sync with the software mixer.

- Allows for full editing of Forms. The user-customizable Form interface is fully accessible from the Web Control interface, allowing users to control their custom interfaces as if they were inside InfinitySet.

- Remote launching of InfinitySet. Web Control, when paired with the ADM software and license, can launch InfinitySet remotely, on all configured render engines.

- Users can create Custom Setups, which can include all or only select render engines for use, allowing for the creation of show/production specific setups that the operator can choose from.

Internal software mixer

InfinitySet features a software-based production mixer for enhanced production functionality such as full control of all the virtual cameras, with non-linear transitions such as Cut-Fade-Wipes and Flies between 3D cameras, plus controlling actions and objects. InfinitySet also features an optional hardware controller for enhanced program production, including manual control of transitions.

FOR-A Hanabi HVS-110 hardware control mixer

As an option, InfinitySet can use the FOR-A Hanabi HVS-110 to take control of the software mixer while maintaining all the production mixer/switcher capabilities of the Hanabi. InfinitySet can therefore be used as an input device with full 3D capabilities, with the added benefit of being fully controlled by a broadcast mixer that retains its own mixing capabilities independently of InfinitySet.

Third-party mixers

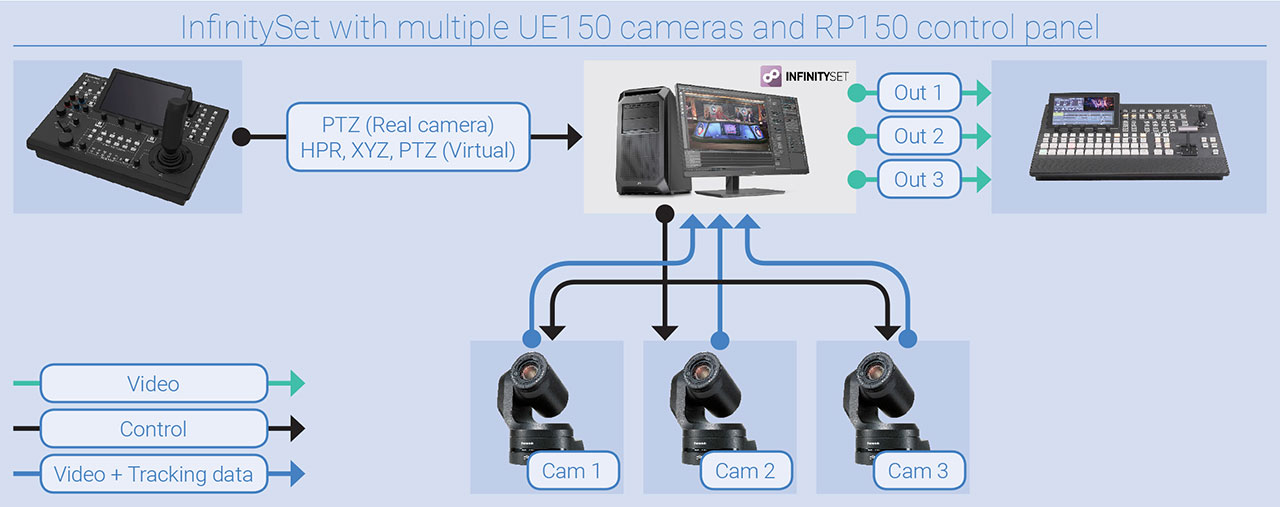

InfinitySet is fully compatible with the AW-UE150 PTZ camera, and also with the RP150 Camera Control Panel. This means that InfinitySet can use the video and tracking data from the PTZ camera and also the Camera Control Panel can manage the virtual cameras within InfinitySet, along with the real PTZ cameras. From the Camera Control Panel, real (UE150) and virtual (InfinitySet) cameras are controlled, and the tracking data from the PTZ cameras is received per frame by InfinitySet.

Turnkey system

InfinitySet is delivered as a complete, ready-to-use turnkey system that can be integrated fully and immediately into any broadcaster’s workflow. Using standard broadcast plus complete IT connectivity, all Brainstorm products are prepared to work at their best from day one.

Licenses

This license is ideal for high-spec requirements as it includes enhanced TrackFree technology (TeleTransporter, 3D Presenter and HandsTracking) and is able to work with tracking devices.

This other license is meant for mid-range studios. It also features TrackFree technology, but only includes 3D Presenter and HandsTracking.

Hardware Recommendations

- HDD 500GB (OS)

- SSD 256GB (DATA)

- HP Serial Port Adapter Kit

- HP USB 1000dpi Laser Mouse

- Low: 1 input, 1 output (fill)

- Medium: 4 input, 1 output (fill) (3 input fill/key)

- High: 4 or more inputs, at least 1 output (fill)

- 4K30p: 2 input, 1 output (fill) (6G)

- 4K60p: 1 input, 1 output (fill) (3G 4 quadrants)

- Laptop: 1 input, 1 output (fill/key) (no 720p)

| Tier | Model | CPU | GPU | RAM | SDI |
| --- | --- | --- | --- | --- | --- |
| Low | z4 | W2103 | GTX1070 + HP Z4 Fan and Front Card Guide Kit | 16GB | 2 x BM Decklink 4K (Level: B) |
| Medium | z4 | W-2135 | RTX5000 | 32GB | Corvid 88 (Level: C) |
| High (Unreal) | z4 | W-2145 | RTX6000 | 32GB | Corvid 88 (Level: C) |
| 4K30p | z4 | W-2145 | RTX6000 | 32GB | BM Decklink SDI 4K + BM Decklink 4K Extreme (Level: C) |
| 4K60p | z4 | W-2145 | RTX6000 | 32GB | Corvid 88 (Level: C) |
| Ultra | z8 | W-2145 | RTX6000 | 32GB | Corvid 88 (Level: C) |
| Laptop | zbook 17 G6 | i7-9850H | RTX5000 | 32GB | Blackmagic ultrastudio 4K (Apple TB3 Adapter) (Level: B) |

Aja

- Kona 3 (Aja 2Ke)

- Kona 3G

- Kona 3G Quad

- Kona 4

- Corvid 88

- Corvid 1

- IO 4K

- Corvid 44

- Corvid 44 12G (Corvid 44 8KMK)

Blackmagic

- Decklink HD Extreme 3

- Decklink HD Extreme 3D

- DeckLink 4K Extreme

- DeckLink 4K Pro

- DeckLink 4K Extreme 12G

- DeckLink 8K Pro

- DeckLink SDI

- DeckLink SDI 4K

- DeckLink Duo

- DeckLink Quad

- DeckLink Quad 2

- DeckLink Mini Recorder

- DeckLink Mini Monitor

- UltraStudio Mini Recorder

- UltraStudio Mini Monitor

- UltraStudio SDI

- UltraStudio 4K

- UltraStudio Express

- Intensity Shuttle

- Intensity Pro

- Intensity Pro 4K

Bluefish

- Bluefish Epoch Neutron (Type B)

- Epoch | 4K Neutron (model B)

- Bluefish Epoch 4K Supernova S+

- Bluefish Epoch Supernova CG

For-A

- For-A MBP12

- For-A MBP-2144

Matrox

- Matrox X.mio2 and X.rio

- Matrox X.mio3